Echo Show: the power of show and tell

Amazon’s recent announcement of Echo Show – a touchscreen device with Alexa voice technology built in – got a generally favorable reception, but in that reception I observed two things:

- The focus on Echo Show’s video calling capabilities (often to the exclusion of all else).

- The immediate comparisons asking the question, "how is it any different than iPad with Siri?"

In other words, what’s the big deal? When I read this type of reaction I could almost hear the yawn.

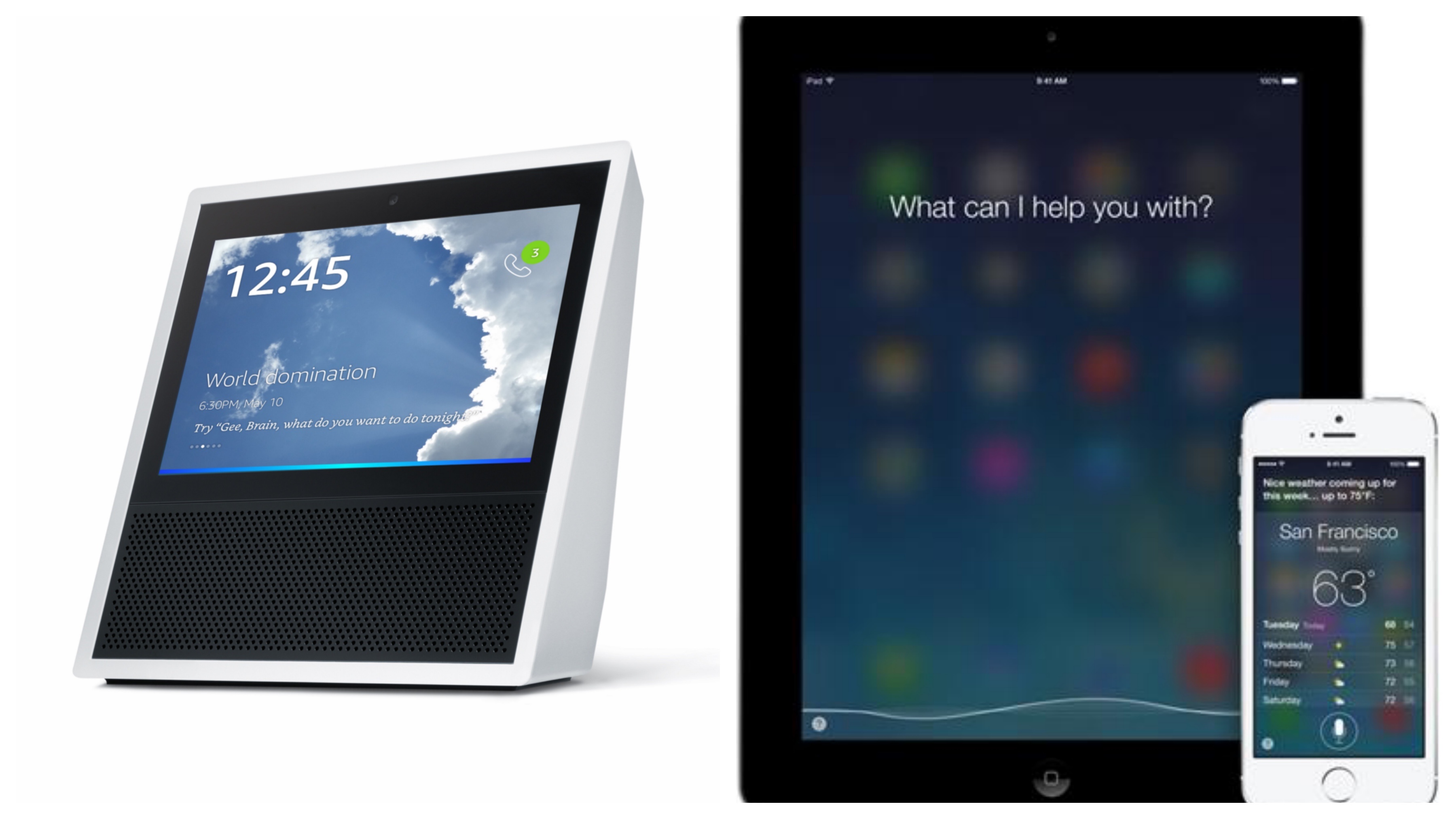

Echo Show = iPad + Siri?

At the superficial level, when you put the products side by side, they are quite similar: screens with a voice assistant.

But I would argue that there’s really quite a profound difference, and if you look beyond the superficial comparisons of Echo Show to other tablet devices that include voice assistants, you can begin to see it. The difference stems from the original design intent and, more importantly, the end user experience.

Voice First -vs- Screen First

The Apple iPad was designed to accommodate a user primarily interacting with it via touch and gestures. In such a screen-first (voice-second) scenario, Siri is used to perform an action that falls outside the purview of a specific application. Only recently has Apple opened up Siri to developers, allowing third parties to include voice capabilities in native iOS apps. As such, Siri's most commonly serviced requests are pretty general:

- What time is it?

- Please call [Person]

- Set or remove an alarm

- Set a timer

On the other hand, Echo Show was designed voice-first (screen-second), and therefore expects skills to use voice as their primary navigation model. The screen doesn't replace voice; it adds a level of user engagement that enhances, enriches, and expands the voice experience.

Real World Use-Cases

Consider informational user queries where the question at hand is answered verbally by the voice agent, but the visual experience includes images, videos, and related information that enrich the response.

Curious about a drug's possible side effects? Imagine a voice skill that responds to questions like "What are the side effects of Metformin?" with a direct and immediate verbal answer, but with a companion experience that includes a picture of the medication, a video discussing warning signs, etc.
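To make the voice-first idea a bit more concrete, here is a rough sketch of how such a skill might shape its reply. This is not the Alexa Skills Kit response format; `SkillResponse`, `VisualCard`, `answer_side_effects`, the placeholder drug data, and the example URL are all hypothetical names made up for illustration. The point it tries to show is that the spoken answer is always complete on its own, and the screen content is layered on top only when a screen is available.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VisualCard:
    """Optional on-screen companion content (hypothetical shape)."""
    title: str
    image_url: Optional[str] = None
    details: List[str] = field(default_factory=list)


@dataclass
class SkillResponse:
    """A voice-first reply: speech is required, the card is optional."""
    speech: str
    card: Optional[VisualCard] = None


# Placeholder data for illustration only, not real medical information.
SIDE_EFFECTS = {
    "exampledrug": ["placeholder effect A", "placeholder effect B"],
}


def answer_side_effects(drug: str, has_screen: bool) -> SkillResponse:
    """Answer verbally first; attach a visual card only if a screen exists."""
    effects = SIDE_EFFECTS.get(drug.lower())
    if effects is None:
        return SkillResponse(speech=f"Sorry, I don't have information on {drug}.")

    # The spoken answer is identical with or without a screen.
    speech = f"Possible side effects of {drug} include " + ", ".join(effects) + "."
    if not has_screen:
        return SkillResponse(speech=speech)

    # The card enriches the answer; it never replaces the speech.
    card = VisualCard(
        title=f"{drug}: side effects",
        image_url="https://example.com/exampledrug.png",  # hypothetical URL
        details=list(effects),
    )
    return SkillResponse(speech=speech, card=card)
```

Calling `answer_side_effects("ExampleDrug", has_screen=False)` and `answer_side_effects("ExampleDrug", has_screen=True)` would return the same `speech` text, with only the second carrying a `card`.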

Also consider applications where these types of voice-first screen-second experiences will naturally excel -- in particular, those situations where a person has a visual or motor impairment that makes a screen-first interaction difficult or impossible.

Imagine a voice skill for the Echo Show that enables people to track whether they’ve taken their medications. With a voice-first device like the Echo Show, the skill can offer a visual experience displaying data on the medication schedule and indicate how well the user has adhered to their treatment plan.

Contrast all of these experiences with a comparable experience on a screen-first device like an iPad and you'll start to understand the differences between the two.